I recently had the opportunity to educate myself a bit about Censorship Resistance Systems (CRS) and wanted to share my understanding to make it easier for those who are interested in this topic. In this blog post, I will summarize the SoK: Making Sense of Censorship Resistance Systems paper and the corresponding 15-minute talk at the Privacy Enhancing Technologies Symposium, both of which greatly helped me grasp this subject. I highly recommend reading the paper or watching the talk for a more in-depth understanding.

Before we dive in, I’d like to emphasize that while I’ve gathered valuable insights, I’m by no means an expert in this field. So please take everything you read here with a grain of salt.

The paper makes several contributions, including:

- Providing a censorship model

- Providing a framework for evaluating CRSs

- Evaluating 73 available CRSs

- Identifying research gaps in the field

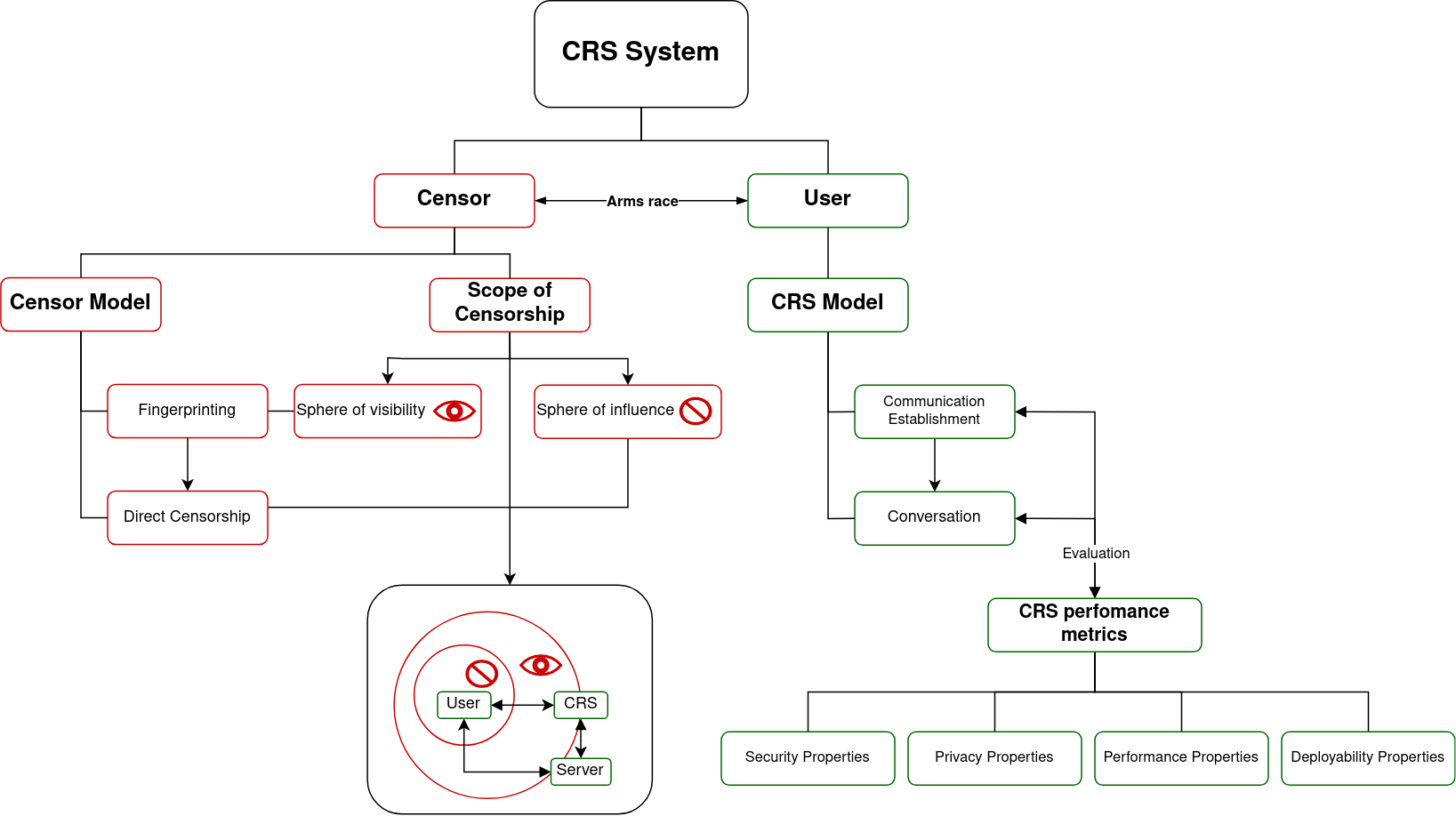

To provide a visual overview, here’s a mind map:

Introduction

When discussing censorship, three parties are involved. First, there is the censor, who aims to block specific content they disagree with (while not interrupting the flow of content they agree with). Second, there are users who want to access censored content. Third, there are publishers who provide the content that the censor attempts to restrict. The goal of a CRS is to enable users to access censored content and to empower publishers to distribute their content freely.

We might also want the CRS to protect the identities of users and publishers in case any information gets leaked. We probably also don’t want the CRS to be too slow, and we might want it to scale well. These and other desirable properties of CRSs are defined later in this post.

Arms race

When we think about the censor’s power, we can say that the censor operates in two spheres: a sphere of visibility, in which it can observe unwanted behavior, and a sphere of influence, in which it can exert restrictions.

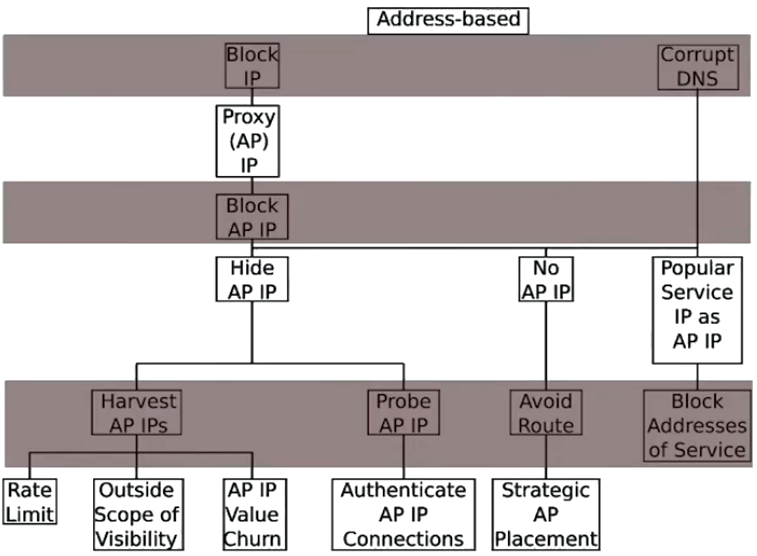

If the censor wants to block certain content within its sphere of influence, there are some straightforward ways of doing so. However, users and publishers can respond with measures that circumvent the censor’s restrictions. For example, the censor might block certain IP addresses, which users could circumvent by using a proxy in a location that does not block these addresses. This might lead the censor to block the proxy’s IP address, which in turn can be circumvented by other measures, such as hiding the IP address.

This back-and-forth between the censor and users becomes increasingly costly for both sides.

The censor wants to block as much censored material as possible without disrupting uncensored traffic. There is a natural tradeoff between these two goals.

To evaluate how well a censor blocks traffic, we can examine:

- False negatives, measured by the false negative rate (FNR): not blocking something that should have been blocked

- False positives, measured by the false positive rate (FPR): blocking something that should not have been blocked

The ideal scenario for the censor is an FNR and an FPR of zero, indicating a perfectly working censor. Conversely, the ideal scenario for a CRS is that the censor fails entirely, resulting in high false negative or false positive rates.

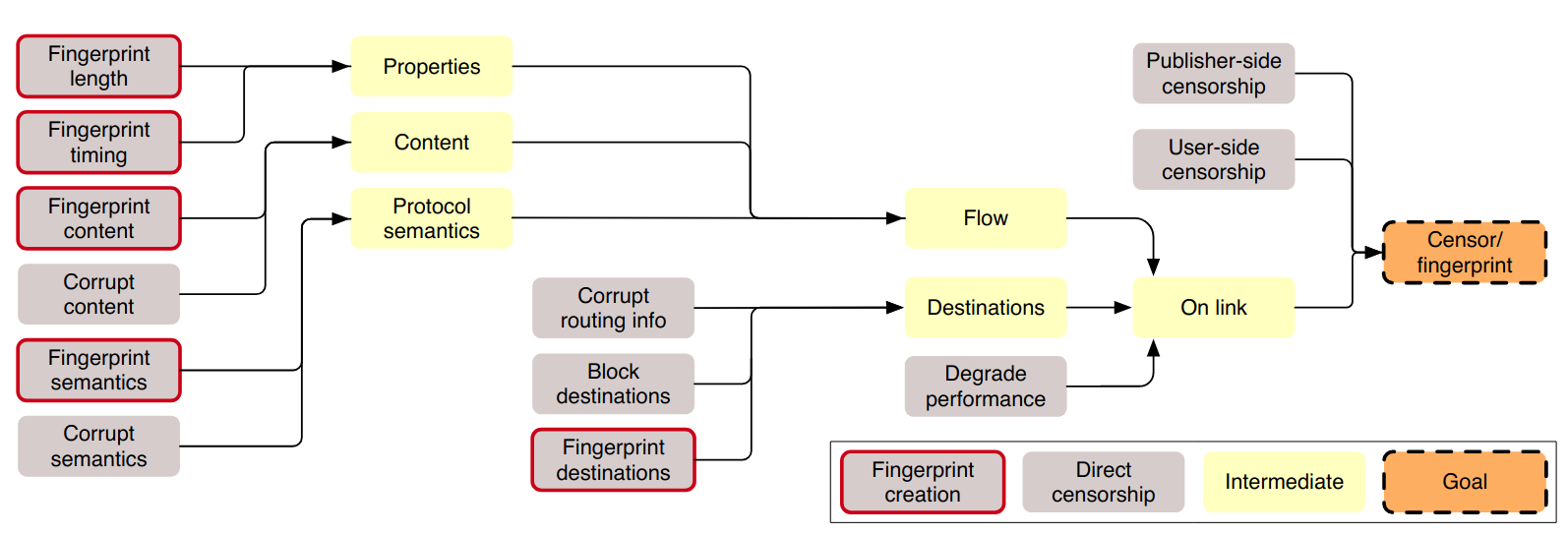
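To make the two rates concrete, here is a minimal sketch (not taken from the paper) that computes them from a list of ground-truth labels and a list of the censor’s blocking decisions. The helper name `error_rates` and the example data are hypothetical.

```python
from typing import Sequence


def error_rates(should_block: Sequence[bool], blocked: Sequence[bool]) -> tuple[float, float]:
    """Return (FNR, FPR) for a censor's blocking decisions.

    should_block[i] is the ground truth (True = content the censor wants blocked);
    blocked[i] is what the censor actually did with item i.
    """
    if len(should_block) != len(blocked):
        raise ValueError("inputs must have the same length")
    # False negative: should have been blocked, but was let through.
    fn = sum(1 for truth, action in zip(should_block, blocked) if truth and not action)
    # False positive: should have been let through, but was blocked (collateral damage).
    fp = sum(1 for truth, action in zip(should_block, blocked) if not truth and action)
    positives = sum(1 for truth in should_block if truth)
    negatives = len(should_block) - positives
    fnr = fn / positives if positives else 0.0
    fpr = fp / negatives if negatives else 0.0
    return fnr, fpr


# Hypothetical example: 4 items, the censor misses one and over-blocks one.
print(error_rates([True, True, False, False], [True, False, True, False]))  # (0.5, 0.5)
```

A perfect censor would return `(0.0, 0.0)`; from the CRS’s point of view, success means forcing at least one of the two numbers up.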

Censor Attack Model

To establish a common attack model applicable to all CRSs, the authors of the paper divide the censor’s capabilities into two stages:

- Fingerprinting

- Direct Censorship

First, the censor must be able to find distinguishers between the content they want to block and the content that should not be censored. This process is known as fingerprinting and covers several techniques: fingerprinting destinations (IP address, domain name), content (protocol-specific strings, blacklisted keywords), flow properties (packet length, timing), and protocol semantics (manipulating protocol behavior).
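As an illustration of the fingerprinting stage, the sketch below flags a flow using three of the four fingerprint classes named above (destination, content, and flow properties; protocol semantics is left out). All blocklists, the keyword, the "suspicious" packet length, and the 80% threshold are hypothetical values chosen for the example, not rules from any real censor.

```python
from dataclasses import dataclass, field

# Hypothetical censor configuration (illustration only).
BLOCKED_IPS = {"203.0.113.7"}
BLOCKED_DOMAINS = {"blocked.example"}
BLOCKED_KEYWORDS = {b"forbidden-topic"}
SUSPICIOUS_PACKET_LEN = 586  # hypothetical fixed record size of some CRS protocol
SUSPICIOUS_SHARE = 0.8       # hypothetical: flag flows where >80% of packets have that size


@dataclass
class Flow:
    dst_ip: str
    domain: str
    payload: bytes
    packet_lengths: list[int] = field(default_factory=list)


def fingerprint(flow: Flow) -> set[str]:
    """Return the fingerprint classes (destination, content, flow) that match."""
    matches: set[str] = set()
    # Destination fingerprinting: IP address or domain name on a blocklist.
    if flow.dst_ip in BLOCKED_IPS or flow.domain in BLOCKED_DOMAINS:
        matches.add("destination")
    # Content fingerprinting: blacklisted keyword in the payload.
    if any(keyword in flow.payload for keyword in BLOCKED_KEYWORDS):
        matches.add("content")
    # Flow-property fingerprinting: an unusual packet-length distribution.
    if flow.packet_lengths:
        share = flow.packet_lengths.count(SUSPICIOUS_PACKET_LEN) / len(flow.packet_lengths)
        if share > SUSPICIOUS_SHARE:
            matches.add("flow")
    return matches


suspicious = Flow("203.0.113.7", "mirror.example", b"GET /forbidden-topic", [586] * 10)
print(sorted(fingerprint(suspicious)))  # ['content', 'destination', 'flow']
```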

Once the censor can distinguish censored content, they need a way to block it. Direct censorship techniques include user-side censorship (installing censorship software on the user’s machine), publisher-side censorship (installing censorship software, or removing or corrupting published content), degrading performance (introducing delays), blocking destinations (blocking IP addresses), corrupting routing information, corrupting flow content (injecting HTTP 404 Not Found responses), and exploiting protocol semantics (injecting forged TCP reset packets into a flow).

The paper provides an overview of these techniques and how they interplay.

Censorship Resistance Model

To effectively understand and evaluate Censorship Resistance Systems (CRS), it is essential to establish a model that encompasses the different phases and components of these systems. The CRS model consists of two primary phases: Communication Establishment and Conversation.

During the Communication Establishment phase, the user receives a dissemination link that serves as the gateway for connecting to the CRS server. The Conversation phase covers the communication between the CRS server and the publisher.

For example, the CRS server might be a proxy that is responsible for the conversation, and to access the proxy a user must first acquire credentials (Communication Establishment).
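The two phases can be sketched as a toy proxy. This is a minimal illustration under my own assumptions: the class `ToyCRSProxy`, the random hex credentials, and the dictionary standing in for the publisher are all hypothetical, and a real CRS would deliver credentials out of band rather than returning them directly.

```python
import secrets


class ToyCRSProxy:
    """Hypothetical CRS server modelled as a credential-gated proxy (illustration only)."""

    def __init__(self) -> None:
        self._valid_credentials: set[str] = set()

    def issue_credential(self) -> str:
        """Phase 1, communication establishment: hand out a credential.

        In a real CRS this would reach the user out of band (e.g. via a
        dissemination link); here it is simply returned.
        """
        credential = secrets.token_hex(16)
        self._valid_credentials.add(credential)
        return credential

    def fetch(self, credential: str, path: str, publisher: dict[str, str]) -> str:
        """Phase 2, conversation: relay content from the publisher to the user."""
        if credential not in self._valid_credentials:
            raise PermissionError("unknown credential")
        return publisher[path]


publisher = {"/article": "censored news"}  # stand-in for the publisher's content
proxy = ToyCRSProxy()
cred = proxy.issue_credential()
print(proxy.fetch(cred, "/article", publisher))  # censored news
```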

Systemizing CRS

Systemizing CRSs involves categorizing and defining the specific properties that CRSs should possess. The paper identifies four main categories of properties: Security, Privacy, Performance, and Deployability. Each category encompasses several sub-properties, as outlined in the table below:

| Category | Property | Description |
|---|---|---|
| Security | Unobservability | Ensures that the communication remains undetectable by the censor through techniques such as content obfuscation, flow obfuscation, or destination obfuscation. |
| | Unblockability | Makes it difficult or costly for the censor to block the CRS system, either due to its location outside the censor’s influence or its widespread use. |
| | Availability | Resistance against Distributed Denial of Service (DDoS) attacks. |
| | Communication Integrity | Ensures integrity, authenticity, and support for handling packet loss and out-of-order packets. |
| Privacy | User Anonymity | Maintains user anonymity within the CRS system, preventing tracking and identification. |
| | Server/Publisher Anonymity | Preserves anonymity for servers and publishers, preventing their tracking and identification. |
| | User Deniability | Ensures that the censor cannot confirm the user’s intentional use of the CRS system. |
| | Server/Publisher Deniability | Prevents the censor from confirming that the server or publisher intentionally used the CRS system. |
| | Participant Deniability | Ensures that parties participating in or supporting the CRS system are not implicated by the censor. |
| Performance | Latency | Measures the time between the initialization of communication and the actual transmission. |
| | Goodput | Evaluates the usable bandwidth for information transfer within the CRS system. |
| | Stability | Assesses the scalability and stability of the CRS system. |
| | Computational Overhead | Considers the computational resources required by CRS system participants. |
| | Storage Overhead | Evaluates the storage requirements of the CRS system. |
| Deployability | Synchronicity | Determines whether all participants need to be online simultaneously for conversations within the CRS system. |
| | Network Agnostic | Considers whether the CRS system is agnostic to specific network assumptions. |
| | Coverage | Evaluates the accessibility of the CRS system across different continents and its coverage within each continent. |
| | Participation | Considers the level of cooperation required from CRS system participants. |

With these properties defined, CRSs can be evaluated and compared against each other. The authors evaluate both phases (Communication Establishment and Conversation) and examine how each CRS performs in each category.

Communication Establishment

The authors then categorize CRSs into the following subcategories based on how they achieve communication establishment.

High Churn Access

These systems employ regularly changing credentials to avoid being blocked by the censor.
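One way to picture regularly changing credentials is a credential that rotates every fixed time window, so that anything the censor learns and blocks soon goes stale. The sketch below assumes a secret shared between the CRS and its legitimate users, a 10-minute window, and HMAC-SHA256 derivation; all of these are hypothetical choices for illustration, and high churn systems can also achieve churn in other ways, for example through short-lived volunteer proxies.

```python
import hashlib
import hmac

EPOCH_SECONDS = 600  # hypothetical rotation interval (10 minutes)


def credential_for(shared_secret: bytes, unix_time: float) -> str:
    """Derive the credential valid during the time window containing unix_time."""
    epoch = int(unix_time // EPOCH_SECONDS)
    mac = hmac.new(shared_secret, epoch.to_bytes(8, "big"), hashlib.sha256)
    return mac.hexdigest()[:16]


secret = b"hypothetical shared secret"
# Same window -> same credential; the next window yields a fresh one the censor hasn't seen.
print(credential_for(secret, 0) == credential_for(secret, 599))  # True
print(credential_for(secret, 599) == credential_for(secret, 600))
```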

Rate-Limited Access

This subcategory slows down the rate at which CRS credentials can be acquired, either by requiring Proof of Work or by Time Partitioning.
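Proof of work can be sketched in the style of hashcash: the client must find a nonce whose hash, combined with a server-chosen challenge, starts with a given number of zero bits, while the server can check the answer with a single hash. The function names, the SHA-256 choice, and the difficulty value are assumptions for illustration.

```python
import hashlib


def pow_valid(challenge: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Server side: one hash checks for `difficulty_bits` leading zero bits."""
    digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
    return int.from_bytes(digest, "big") >> (256 - difficulty_bits) == 0


def solve_pow(challenge: bytes, difficulty_bits: int) -> int:
    """Client side: brute-force a nonce; expected cost is about 2**difficulty_bits hashes."""
    nonce = 0
    while not pow_valid(challenge, nonce, difficulty_bits):
        nonce += 1
    return nonce


challenge = b"hypothetical-server-challenge"
nonce = solve_pow(challenge, 12)  # about 4096 hashes on average for the client
print(pow_valid(challenge, nonce, 12))  # True
```

Each extra difficulty bit doubles the client’s expected work while the server’s verification cost stays at one hash, which is what makes mass credential harvesting expensive.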

Active Probing Resistance Schemes

These CRSs aim to evade the censor’s probing attempts. One technique is Obfuscating Aliveness, where the server does not respond until a specific sequence of steps has been completed. Another is Obfuscating Service, where the server delays engaging in the CRS protocol until certain conditions are met.
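Obfuscating Aliveness is similar in spirit to port knocking: the server stays silent unless the client first sends packets to a secret sequence of ports. The sketch below only models the server’s decision logic (no real sockets), and the class name, knock sequence, and `"CRS handshake"` reply are hypothetical.

```python
from typing import Optional


class SilentServer:
    """Toy server that reveals itself only after a secret knock sequence."""

    def __init__(self, knock_sequence: list[int]) -> None:
        self._expected = knock_sequence
        self._progress: dict[str, int] = {}  # client -> number of correct knocks so far

    def on_packet(self, client: str, port: int) -> Optional[str]:
        step = self._progress.get(client, 0)
        if step == len(self._expected):
            return "CRS handshake"  # client completed the sequence: start the protocol
        if port == self._expected[step]:
            self._progress[client] = step + 1
        else:
            self._progress[client] = 0  # wrong knock: silently start over
        return None  # no reply, so a prober cannot tell anything is listening


server = SilentServer([7000, 8000, 9000])
print(server.on_packet("censor-probe", 443))  # None
for port in (7000, 8000, 9000):
    server.on_packet("user", port)
print(server.on_packet("user", 443))  # CRS handshake
```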

Trust-based Access

The CRS only replies to connections it trusts, based on previous behavior or reputation.
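Trust-based access can be sketched as a reputation score that the CRS checks before replying. The invitation rule (a newcomer inherits half of the inviter’s trust), the score updates, and the 0.5 threshold below are hypothetical choices for illustration, not the rules of any particular system.

```python
from typing import Iterable


class TrustGate:
    """Toy reputation gate: the CRS only replies to sufficiently trusted clients."""

    def __init__(self, seed_users: Iterable[str], threshold: float = 0.5) -> None:
        self.threshold = threshold
        # Bootstrap: a few initially trusted users start at full trust.
        self.scores: dict[str, float] = {user: 1.0 for user in seed_users}

    def should_reply(self, client: str) -> bool:
        return self.scores.get(client, 0.0) >= self.threshold

    def invite(self, inviter: str, invitee: str) -> None:
        """A trusted user vouches for a newcomer, who inherits half the inviter's trust."""
        if not self.should_reply(inviter):
            raise PermissionError("inviter is not trusted")
        self.scores[invitee] = self.scores[inviter] / 2

    def record(self, client: str, well_behaved: bool) -> None:
        """Raise trust slowly for good behaviour; halve it for suspected misbehaviour."""
        score = self.scores.get(client, 0.0)
        self.scores[client] = min(1.0, score + 0.1) if well_behaved else score / 2


gate = TrustGate(["alice"])
gate.invite("alice", "bob")          # bob starts at 0.5, exactly the threshold
print(gate.should_reply("bob"))      # True
gate.record("bob", well_behaved=False)
print(gate.should_reply("bob"))      # False (0.25 < 0.5)
print(gate.should_reply("mallory"))  # False
```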

Discussion of results

The authors provide a comprehensive table on page 12, offering an overview of the tested CRS systems. They also provide a detailed discussion of their evaluation results, which can be summarized as follows:

Response after validation (active probing resistance, proof of work)

CRS systems employing active probing resistance and proof of work mechanisms exhibited the following issues:

- Vulnerability to flow fingerprinting

- Increased time required to join the CRS

- Higher computational needs

- Additional storage requirements

Trust-based and high churn access

Schemes based on trust-based access and high churn access demonstrated the benefit of low latency. However, they also exhibited the following drawbacks:

- Lack of content obfuscation

- Lack of flow obfuscation

High churn access

High churn access schemes suffer from two issues in particular:

- Lack of stability and scalability due to reliance on volunteer participants who may drop out unpredictably

- Insufficient privacy and security in the presence of malicious participants

Issues for most schemes

Most CRS schemes share common limitations, such as:

- Lack of authentication for the CRS dissemination channel

- No user anonymity: if the censor is aware of the CRS servers, it can observe all users connecting to them (however, many schemes do offer user and server deniability)

Conversation

The second phase of a CRS is the conversation. The authors divide CRSs into two families of schemes and evaluate each of them using the properties discussed in the Systemizing CRS section: Access-Centric Schemes, which serve users who want to access content, and Publication-Centric Schemes, which give publishers the ability to distribute content. Each family is then broken down into sub-schemes.

Access-Centric Schemes

- Mimicry: Make traffic look like whitelisted communication by imitating an unblocked protocol.

- Tunneling: Tunnel traffic through an unblocked application.

- Covert Channel: Hide traffic in a channel that is not intended for communication (for example, hiding it in images using steganography).

- Traffic Manipulation: Exploit limitations of the censor’s traffic analysis, making even plaintext traffic unobservable.

- Destination Obfuscation: Relay traffic through intermediate nodes to obfuscate the destination.
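The principle behind destination obfuscation can be illustrated by modelling what each relay on a path gets to see. This is a simplified sketch under stated assumptions: it tracks only which neighbours each node observes (relay names must be unique) and leaves out the layered encryption that real relay networks such as Tor use to enforce this separation. The helper names are hypothetical.

```python
def hop_views(user: str, relays: list[str], destination: str) -> dict[str, tuple[str, str]]:
    """Map each relay to the (previous hop, next hop) pair it can observe."""
    path = [user, *relays, destination]
    return {path[i]: (path[i - 1], path[i + 1]) for i in range(1, len(path) - 1)}


def someone_sees_both(views: dict[str, tuple[str, str]], user: str, destination: str) -> bool:
    """True if any single relay observes both the user and the destination."""
    return any(user in pair and destination in pair for pair in views.values())


# With a single relay, that relay links user and destination; with three, none does.
print(someone_sees_both(hop_views("user", ["r1"], "site"), "user", "site"))              # True
print(someone_sees_both(hop_views("user", ["r1", "r2", "r3"], "site"), "user", "site"))  # False
```

A censor watching the user’s link sees only the first relay, and with several relays no single relay links the user to the destination.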

Publication-Centric Schemes

- Content Redundancy: Store content on multiple CRS servers in case one gets taken down.

- Distributed Content Storage: Split content into smaller chunks and store them on multiple CRS servers, so that no single server stores the entire document.
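Both publication-centric ideas can be combined in one small sketch: the document is split into chunks (distributed content storage), and each chunk is placed on several servers (content redundancy), so that taking down one server does not make the document unavailable. The round-robin placement, chunk size, and function names are hypothetical; a real system might also encrypt the chunks or use erasure coding instead of plain replication.

```python
def split_and_replicate(
    data: bytes, servers: list[str], chunk_size: int, replicas: int
) -> dict[str, dict[int, bytes]]:
    """Split data into chunks and place each chunk on `replicas` different servers."""
    if not 1 <= replicas <= len(servers):
        raise ValueError("replicas must be between 1 and the number of servers")
    storage: dict[str, dict[int, bytes]] = {server: {} for server in servers}
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    for index, chunk in enumerate(chunks):
        for r in range(replicas):
            # Round-robin placement: consecutive servers hold copies of the same chunk.
            storage[servers[(index + r) % len(servers)]][index] = chunk
    return storage


def reassemble(storage: dict[str, dict[int, bytes]], n_chunks: int) -> bytes:
    """Rebuild the document from whatever servers are still reachable."""
    found: dict[int, bytes] = {}
    for chunks in storage.values():
        found.update(chunks)
    missing = [i for i in range(n_chunks) if i not in found]
    if missing:
        raise LookupError(f"chunks lost: {missing}")
    return b"".join(found[i] for i in range(n_chunks))


doc = b"abcdefghij"
storage = split_and_replicate(doc, ["s1", "s2", "s3"], chunk_size=3, replicas=2)
del storage["s2"]  # the censor takes one server down
print(reassemble(storage, n_chunks=4))  # b'abcdefghij' (4 = ceil(10 / 3) chunks)
```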

Discussion of results

The authors evaluated 62 systems across the four categories (Security, Privacy, Performance, and Deployability) and provide a comprehensive table showing how each system performs in each category. They also discuss the results, explaining that each scheme has its own strengths and limitations and that the right choice depends on the specific goals and requirements of the censorship resistance system.

Their findings for each scheme can be summarized as follows.

Mimicry schemes

Mimicry schemes provide both content obfuscation and traffic flow obfuscation, but their effectiveness can be compromised due to the inherent complexities of mimicking cover protocols. These schemes may offer user deniability but may fall short in terms of destination obfuscation and some privacy properties.

Tunneling schemes

Tunneling systems typically rely on popular third-party platforms (so that blocking them would cause high collateral damage), but they suffer from protocol overhead, decreased goodput, and increased latency.
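The goodput penalty of tunneling is essentially arithmetic: every byte of cover-protocol framing is a byte that carries no user data. The sketch below uses made-up numbers (a 10 Mbit/s link and 250 bytes of overhead per 1000-byte payload) and ignores retransmissions and latency.

```python
def goodput_mbps(link_mbps: float, payload_bytes: int, overhead_bytes: int) -> float:
    """Usable throughput when each payload is wrapped in a fixed per-message overhead."""
    return link_mbps * payload_bytes / (payload_bytes + overhead_bytes)


# Hypothetical numbers: 1000 payload bytes carried inside 250 bytes of cover-protocol framing.
print(goodput_mbps(10.0, 1000, 250))  # 8.0
```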

Proxy-based schemes

Proxy-based schemes have the highest number of available tools for end users.

Routing traffic through multiple proxies increases latency.

Some schemes use decoy routing, which requires cooperating ISPs. This poses a deployment challenge (and may be a strong assumption, depending on the censor).

Traffic manipulation schemes

Traffic manipulation schemes use network tricks that cause the censor to fail to detect or block content, which makes them susceptible to out-of-order packets. If the censor changes its censorship policy, these schemes may stop working.
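One well-known example of such a network trick is splitting a request across several small segments, so that a censor that inspects each packet in isolation never sees the blocked keyword. The sketch below compares a per-packet matcher with one that reassembles the stream first; the keyword, segment size, and function names are hypothetical, and real packets would also carry TCP/IP headers. It also shows why these schemes are fragile: a censor that starts reassembling streams defeats the trick.

```python
BLOCKED_KEYWORD = b"forbidden-topic"  # hypothetical keyword the censor looks for


def segment(payload: bytes, size: int) -> list[bytes]:
    """Split a payload into segments of at most `size` bytes."""
    return [payload[i:i + size] for i in range(0, len(payload), size)]


def per_packet_censor(packets: list[bytes]) -> bool:
    """Naive censor: inspects each packet on its own."""
    return any(BLOCKED_KEYWORD in packet for packet in packets)


def reassembling_censor(packets: list[bytes]) -> bool:
    """Stronger censor: reassembles the (in-order) stream before matching."""
    return BLOCKED_KEYWORD in b"".join(packets)


request = b"GET /forbidden-topic HTTP/1.1\r\n"
packets = segment(request, 4)
print(per_packet_censor(packets))    # False: no 4-byte segment can contain the keyword
print(reassembling_censor(packets))  # True
```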

Covert channel-based systems

Their benefit is that they provide unobservable communication and a high degree of privacy. However, they are limited in the amount of information they can carry, which results in low goodput. Covert channels are also vulnerable to attacks that drop or reorder packets.
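Least-significant-bit (LSB) embedding is a simple steganographic covert channel, and it makes the goodput limitation visible: each cover byte carries one hidden bit, so the hidden payload can be at most one eighth of the cover data. The sketch below works on raw bytes standing in for image pixels; the function names are hypothetical, and a real system would likely encrypt the message first.

```python
def embed(cover: bytes, message: bytes) -> bytes:
    """Hide message bits (MSB first) in the least significant bit of each cover byte."""
    bits = [(byte >> (7 - i)) & 1 for byte in message for i in range(8)]
    if len(bits) > len(cover):
        raise ValueError("cover too small: need 8 cover bytes per message byte")
    out = bytearray(cover)
    for i, bit in enumerate(bits):
        out[i] = (out[i] & 0xFE) | bit  # overwrite only the lowest bit
    return bytes(out)


def extract(stego: bytes, length: int) -> bytes:
    """Read `length` hidden bytes back out of the cover's least significant bits."""
    if len(stego) < 8 * length:
        raise ValueError("stego data too short")
    out = bytearray()
    for j in range(length):
        byte = 0
        for i in range(8):
            byte = (byte << 1) | (stego[8 * j + i] & 1)
        out.append(byte)
    return bytes(out)


cover = bytes(range(64))  # stand-in for 64 bytes of pixel data
stego = embed(cover, b"hi")
print(extract(stego, 2))  # b'hi'
```

Because extraction relies on the cover bytes arriving complete and in order, dropped or reordered packets corrupt the hidden message, which matches the vulnerability mentioned above.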

Publication-centric schemes

There are only a limited number of publication-centric schemes (only two tools were available). These schemes aim to increase the availability of stored information while offering deniability.

Open Areas and Research Challenges

In this section, the authors discuss potential future work and highlight areas that require further exploration.

Modular System Design

One area of interest is the development of more modular CRSs. While many CRSs perform well in certain categories such as security or privacy, most do not offer the possibility of extending the system. If CRSs were more modular, it might be possible to combine them into a better overall system. However, the authors acknowledge the challenges of this approach, as it requires careful consideration to avoid breaking the underlying assumptions of the individual CRSs.

Revisiting Common Assumptions

The authors suggest revisiting the common assumptions made by CRSs regarding the capabilities of censors and the existence of CRS components beyond the censor’s influence. These assumptions may not necessarily hold true or may lack sufficient data for proper evaluation. For example, assessing the technological capabilities of a censor may be difficult without comprehensive data. Therefore, it is essential to reevaluate and update these assumptions.

Security Gaps

The authors outline three security gaps they found during their research:

- Poisoning CRS information (corrupting routing information)

- No prevention of DDoS attacks

- Lack of protection against malicious CRS participants

Considerations for Participation

Many schemes rely on volunteers who have limited control over their level of participation, such as the bandwidth or the number of connections they provide. Additionally, there is a trend of CRSs relying on commercial participants. Because blocking such a service would cause high collateral damage, the censor should in theory be deterred from doing so. However, such a participant also represents a single point of failure.

Conclusion

In conclusion, the authors emphasize the open areas and challenges that current CRSs face. They highlight the need for modular system design, reassessment of common assumptions, addressing security gaps, and considerations for volunteer participation. By addressing these challenges, future CRSs can be more effective in countering censorship and promoting freedom of information.